Cloud cost optimization often starts with engineering teams that expect to save time and money with a do-it-yourself (DIY) approach. They soon discover these methods are much more time-consuming than initially believed, and the effort doesn’t lead to expected savings outcomes. Let’s break down these DIY methods and explore their inherent challenges.

Introduction

One challenge cited in The State of FinOps data remains constant, despite great strides in FinOps best practices and processes. For the past three years, FinOps practitioners have experienced difficulty getting engineers to take action on cost optimization recommendations.

We don’t think they have to.

What prevents engineering and finance teams from achieving optimal cloud savings outcomes?

According to the State of FinOps data, from the FinOps Foundation:

- Teams are optimizing in the wrong order. Engineers typically default to starting with usage optimizations before addressing their discount rate. This guide explains why you should, in reality, begin with rate optimizations, specifically with ProsperOps.

- Engineers have too much to do. Relying on them to do more work isn’t helpful and doesn’t yield desired results.

- Cost optimization isn’t the work engineers want to do. Is cost optimization the best use of engineering time and talent?

According to engineers performing manual cloud cost optimization work:

- Recommendations from non-engineers are over-simplified.

  - An instance may be sized not to meet a vCPU or memory number, but rather to satisfy a throughput, bandwidth, or device attachment requirement. From a vCPU/RAM standpoint an instance may therefore look underutilized, yet it cannot be downsized because of a disk I/O requirement or another metric the recommendation process does not observe.

  - A lookback period that is too brief will produce poor recommendations. For example, if the rightsizing recommendations are based on a 60-day lookback period and the app has quarterly seasonality, the recommendation may not capture the ‘high water mark’ or seasonal peak. In these cases, the application would ideally be modified to scale horizontally by increasing instance quantity as needed, but that is not always possible with legacy or monolithic applications.

- Other than engineers, most people in the organization are unaware of the impact of each recommendation.

  - For instance, engineering may be asked to convert workloads to Graviton because of the cost savings opportunity. That would require every installed binary to have a variant for the ARM architecture. This may be the case for the primary application but not for other tools the organization requires, such as a SIEM agent, DLP tool or antivirus software.

  - Instances may also look eligible for downsizing when a high availability application has a warm standby (typically at the DB layer) that is kept online to meet a low RTO but is only synchronizing data and not actively serving end users.
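The lookback problem described above can be illustrated with a small sketch. It assumes a hypothetical workload that idles at 20% CPU and spikes to 85% during the last five days of each quarter; the function names and the numbers are illustrative only, not taken from any recommendation tool.

```python
from datetime import date, timedelta

def synthetic_usage(year):
    """Hypothetical daily CPU %: 20% normally, 85% in the last 5 days of each quarter."""
    quarter_ends = [date(year, 3, 31), date(year, 6, 30),
                    date(year, 9, 30), date(year, 12, 31)]
    usage = {}
    day = date(year, 1, 1)
    while day.year == year:
        near_quarter_end = any(0 <= (qe - day).days < 5 for qe in quarter_ends)
        usage[day] = 85.0 if near_quarter_end else 20.0
        day += timedelta(days=1)
    return usage

def peak_in_lookback(daily_cpu, as_of, lookback_days):
    """Highest daily CPU % in the window of lookback_days ending at as_of."""
    start = as_of - timedelta(days=lookback_days)
    return max(cpu for day, cpu in daily_cpu.items() if start < day <= as_of)

usage = synthetic_usage(2023)
as_of = date(2023, 9, 15)

short_peak = peak_in_lookback(usage, as_of, 60)    # window starts after the June peak
long_peak = peak_in_lookback(usage, as_of, 120)    # window reaches back past June 30

print(f"60-day lookback peak:  {short_peak}%")
print(f"120-day lookback peak: {long_peak}%")
```

With the shorter window the instance looks safely downsizable, while the longer window shows the seasonal peak the recommendation would have missed.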

Cloud Cost Optimization 2.0 Mitigates FinOps Friction

In a Cloud Cost Optimization 2.0 world, cost-conscious engineers build their architectures smarter—requiring fewer and less frequent resource optimizations.

But to achieve this, the discount management process (the financial side of cloud cost optimization) must be managed efficiently. Autonomous software like ProsperOps eliminates the need for anyone, including engineers, to manage rate optimization—the lever that produces immediate and magnified cloud savings.

Engineers should protect and reclaim their time, and let sophisticated algorithms do the work.

But we also recognize that engineers are expert problem solvers, and some may not see the need to yield control to an autonomous solution. This guide outlines your options and the reality of various cost-optimization methods, starting with a manual do-it-yourself approach.

Part 1: The Ins-and-Outs of Manual DIY Cloud Cost Optimization

In the cloud, costs can be a factor throughout the software lifecycle. So as engineers begin to work with cloud-based infrastructure, they often have to become more cost-conscious. It’s a responsibility that falls outside their primary role, yet they often have to decide how to mitigate uncontrollable cloud costs and optimize what falls in their domain—resources.

Many teams use in-house methods to do this, which may include manual DIY methods and/or software that recommends optimizations.

Manual Cloud Cost Optimization Methods

These options are usually perceived to be low-cost, low-risk, community-based, open-source, or homegrown solutions that address pieces of optimization tasks.

Engineering teams may go this route because they don’t know the reality of a manual DIY optimization experience or the tools that offer the best support. They may oppose third-party tools or believe they can manage everything in-house.

Standard DIY manual methods to identify and track resources and manage waste are usually handled with a combination of:

- Spreadsheets

- Scripts

- Alerts and notifications

- Tagging to define and identify resources using AWS Cost Explorer

- Native tools like AWS Trusted Advisor, Amazon CloudWatch, AWS Compute Optimizer, AWS Config, Amazon EventBridge, Cost Intelligence Dashboards and AWS Budgets

- Forecasting

- Rightsizing and other resource strategies

- Writing code to automate simple tasks

- Custom dashboards, based on CUR data

Regarding cloud purchases, some engineers may manually calculate to verify that cloud service changes and purchases fall within the budget.

Whatever the method, a typical result is this: it’s time-consuming to act on reported findings.

Manual DIY Challenges

Getting Data and Providing Visibility for Stakeholders

With a manual approach, data lives in different places, and there is a constant effort to assimilate it for decision-making. There isn’t centralized reporting or a single pane of glass through which to view data. Data is hard to collect and is often managed in silos, so multiple stakeholders cannot see the data that matters to them.

Given this constraint, it’s easy to see how DevOps teams can struggle to validate that they are using the correct discount type, coverage of instance families and regions, and/or commitment term.

It Wastes Time and Resources

Cloud costs need to be actively managed. But engineers shouldn’t be the ones doing it.

Requiring engineers to shift from shipping product innovations to manual cloud cost optimizations is an inefficient use of engineering resources. Establishing a FinOps practice, however, helps reallocate and redistribute workload more appropriately. It’s a better strategy.

Processes Don’t Scale

Then there’s the issue of working with limiting processes, like using a spreadsheet to track resources. Spreadsheets offer a simple, static view that doesn’t capture the cloud’s dynamic nature. In practice, it’s challenging to map containers and resources or see usage at any point in time, for example.

Who is managing the costs when multiple people are working in one account? As organizations grow, it becomes more challenging to identify the point of contact managing the moving parts of discounts and resources.

With DIY, one person or a team may be absorbed in time-consuming data management and distribution with processes that don’t scale.

Missed Savings Opportunities and Discount Mismanagement

Many companies take advantage of the attractive discounts Savings Plans provide for AWS cloud services. The trade-off for the discount is a 12-month or 36-month commitment term. Without centralized, real-time data, engineering teams are more likely to act conservatively and under-commit, leaving the company exposed to higher on-demand rates.

Cloud discount instruments, like Savings Plans and Reserved Instances, offer considerable relief from on-demand rates. Managing them involves the coordination of the right type of instrument, which:

- Is purchased at different times

- Covers different resources and time frames

- Expires at different times

- Can change resource footprint or architecture

Managing discount instruments is a dynamic process involving a lot of moving parts. It’s extremely challenging to coordinate these elements manually. That’s why autonomous discount management successfully produces consistent, incremental savings outcomes, an approach we’ll cover later in this guide.

Prioritizes Waste Reduction

Engineering teams naturally focus on reducing waste because it’s familiar ground—and work they are already doing. But it takes effort and time to identify and actively manage waste, which is constantly being created.

And, surprisingly, reducing waste in the cloud doesn’t affect savings as much or as fast as optimizing discount instruments, also called rate optimization. Treat waste reduction as the third item on the list of optimization priorities.

The reality of manual DIY optimization is more challenging than many engineering teams expect. Software alleviates some of these pain points.

Cloud Cost Management Software Generates Optimization Recommendations. Is that Enough?

Most cloud optimization software helps teams understand opportunities for optimization. These tools collect, centralize and present data:

- Across accounts and organizations

- From multiple data sources, including native tools like the AWS Cost and Usage Report and Amazon CloudWatch, or third parties

- From a single customizable pane of glass for various stakeholders

These solutions centralize reporting so that stakeholders have a more comprehensive, accurate view of the environment and potential inefficiencies.

Recommendations-Based Software Challenges

It’s Still a Manual Process

The problem is that although engineering teams are being given directives on optimization, they still have to vet the recommendation and physically do the optimization work.

Engineers have to validate the recommendation based on software and other requirements, which could involve coordination between various teams. And once a decision has been made, the change is usually implemented manually.

Limited Savings Potential

Most solutions recommend one discount instrument as part of their optimization recommendation.

Without a strategic mix of discount instruments or flexibility to accommodate usage as it scales up and down or scales horizontally, companies will end up with suboptimal savings outcomes.

Risk and Savings Loss

The instruments that offer the best cloud discounts also require a one-year or three-year commitment. Even with the data assist from software, internal and external factors make it challenging to predict usage patterns over one-year or three-year terms.

As a result, engineering teams may unintentionally under- or over-commit, both of which result in suboptimal savings.
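A rough sketch of why both directions hurt, under simplifying assumptions: a single flat 30% discount, a hypothetical day with 12 busy hours and 12 quiet hours, and an hourly commitment expressed in discounted dollars. The function names and figures are illustrative, not a model of any specific AWS instrument.

```python
def hourly_cost(usage_od, commitment, discount):
    """Cost of one hour.

    usage_od   -- usage priced at on-demand rates
    commitment -- hourly commitment in discounted dollars (paid even if unused)
    discount   -- flat discount rate (an assumption; real rates vary by resource)
    """
    covered_od = commitment / (1 - discount)     # on-demand usage the commitment can absorb
    overflow = max(usage_od - covered_od, 0.0)   # the rest is billed at on-demand rates
    return commitment + overflow

def total_cost(hourly_usage, commitment, discount):
    return sum(hourly_cost(u, commitment, discount) for u in hourly_usage)

usage = [100.0] * 12 + [60.0] * 12   # hypothetical on-demand dollars per hour
discount = 0.30

no_commit = total_cost(usage, 0.0, discount)
under = total_cost(usage, 21.0, discount)     # covers only $30/hour of usage
matched = total_cost(usage, 42.0, discount)   # covers the $60/hour baseline
over = total_cost(usage, 70.0, discount)      # covers the $100/hour peak all day

for label, cost in [("none", no_commit), ("under", under),
                    ("baseline", matched), ("over", over)]:
    print(f"{label:>8}: ${cost:,.2f}")
```

In this toy scenario the commitment matched to the steady baseline is cheapest; committing less leaves usage at on-demand rates, and committing to the peak pays for capacity that sits unused during quiet hours.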

Neither DIY Method Solves the Internal Coordination “Problem”

Operating successfully—and cost-effectively—in the cloud involves ongoing communication, education and coordination between engineers (who manage resources) and the budget holder (who may serve in IT, finance or FinOps).

This is the case whether teams are using manual DIY methods or recommendation software. The coordination looks like this:

- The budget holder sees an optimization recommendation

- The engineer validates if it is viable

- If the recommendation involves resources, the engineer takes action to optimize

- If the recommendation involves cloud rates, the budget holder or FinOps team takes action to optimize

Both DIY methods involve a lot of back-and-forth exchanges and manual effort to perform the optimization.

It’s possible to consolidate steps in this process (eliminating the back-and-forth) and shift the conversations from tactical to strategic with autonomous rate optimization.

FinOps teams, in particular, thrive with autonomous rate optimization. Because the financial aspect of cloud operations is being managed using sophisticated algorithms, cloud savings are actively realized in a hands-free manner.

With costs under control, engineers can focus on innovation and resource optimization as it fits into their project schedule.

FinOps teams, too, are freed up to address other top FinOps challenges and plans related to FinOps maturity.

Rate Optimization Provides Faster Cloud Savings Than DIY

In seeking DIY methods of cloud cost optimization, companies may lay some groundwork in understanding cloud costs, but they aren’t likely to find impactful savings. This is largely because there is still manual work associated with both approaches and resource optimization is the focus.

Resource optimization is important, but rate optimization is an often overlooked approach that yields more efficient, impactful cloud savings. We will explore rate and resource optimization further in this guide and explain why the order of optimization action makes a major difference in savings outcomes.

Part 2: Optimizing Engineering Resources in the Cloud

As companies search for ways to optimize costs, they want—and need—data about many different elements, including the amount of money they are spending in the cloud, the savings potential and resources they can eliminate.

It can get complicated fast. To help teams prioritize, it’s a good idea to break down cloud cost optimization into two different universes: engineering and finance.

Usage Optimization vs. Rate Optimization

Engineering teams are actively building and maintaining cloud infrastructure. For this reason, they tend to own resource optimizations.

Rate optimization is about pricing and payment for cloud services. Depending on the organization’s structure and FinOps maturity, a person in IT, finance or FinOps may manage the cloud budget and levers used to achieve cloud savings.

Resource Optimization Happens in the Engineering Universe

Any company can get caught in the vicious cycle of cloud costs. It’s important to understand the implications of resource decisions (made by engineering teams), whether companies are new to the cloud or born in the cloud. Otherwise, they scramble to adjust and react to costs with potentially low visibility and governance.

Companies taking advantage of AWS Migration Acceleration Program (MAP) credits can fast-track cloud migrations with engineering guidance and cost-cutting service credits. Without forethought, resource and cost strategy, and visibility and accountability, there can be sticker shock once the assistance runs out.

Cloud-native companies may understand the cloud and even have costs fairly contained. But sudden, rapid growth or a significant business change means that they have to adjust to unknowns. Costs can rise even with optimization mechanisms in place.

Both situations involve an issue around scale. It takes time for companies to monitor, report and determine how to best optimize cost and resources. As they figure this out, they may be paying higher on-demand rates, which is not cost-effective.

With regard to cloud compute, resource waste is one area engineers may feel in command of because of the nature of their work. They may use resource deployment and monitoring techniques like the following to use resources more efficiently and manage waste:

- Right-sizing: Matching instance types and sizes to workload performance and capacity requirements. This is challenging to implement in organizations that have rapidly changing or scaling environments because it requires constant analysis and manual infrastructure changes to achieve results.

- Auto-scaling: Setting up application scaling plans that efficiently utilize resources. This optimization technique might be effective for highly dynamic workloads, but it doesn’t offer as much cost savings for organizations with static usage.

- Scheduling: Configuring instances to automatically stop and start based on a certain schedule. This might be practical for organizations with strict oversight and monitoring capabilities, but most basic usage and cost analysis tools make regular predictions challenging.

- Spot Instances: Leveraging EC2 Spot Instances to take advantage of unused capacity at a deep discount. Spot Instances might only be useful for background tasks or other fault-tolerant workloads because AWS can reclaim them with just a two-minute warning.

- Cloud-native design: Building more cost-efficient applications that fully utilize the unique capabilities of the cloud. This can require a significant overhaul if an application was migrated from on-premises infrastructure without any redesign.
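As a quick illustration of the scheduling technique in the list above, the sketch below estimates the share of instance hours avoided when an environment runs only on a schedule instead of around the clock. The 12-hours-a-day, five-days-a-week schedule is a hypothetical example, and the result is a share of hours, not a guaranteed bill reduction.

```python
HOURS_PER_WEEK = 24 * 7

def scheduled_savings(hours_per_day, days_per_week):
    """Fraction of always-on instance hours avoided by running only on a schedule."""
    running_hours = hours_per_day * days_per_week
    return 1 - running_hours / HOURS_PER_WEEK

# Hypothetical development environment that runs 12 hours a day on weekdays.
dev_env = scheduled_savings(hours_per_day=12, days_per_week=5)
print(f"Instance hours avoided: {dev_env:.0%}")
```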

It takes time and effort to define, locate and actively manage waste. But the surprising thing is that reducing waste doesn’t affect cloud costs as much as one might expect. There is another lever that produces more immediate, expansive cloud savings: rate optimization.

Cloud Rate Optimization: The Financial Side of Cloud Computing

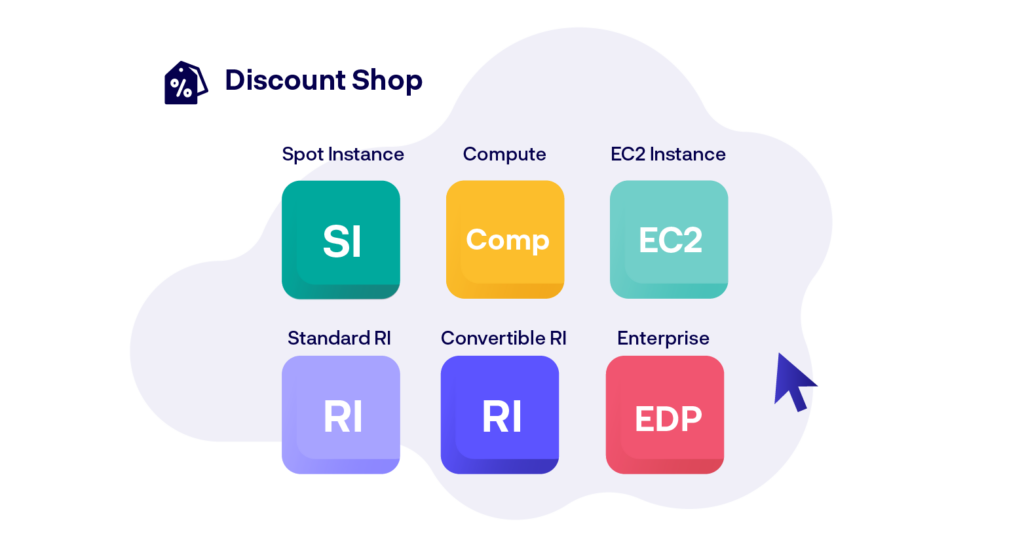

Cloud providers offer various discount instruments to incentivize the use of cloud services. AWS, for example, offers:

- Spot Instance discounts

- Savings Plans

  - EC2 Instance

  - Compute

- Reserved Instances

  - Standard Reserved Instances

  - Convertible Reserved Instances

- Enterprise Discount Programs

- Promotional Credits

Spot Instances

Spot Instances provide a deep discount for unused EC2 instances. Spot is a good option for applications that can run anytime and be interrupted. When appropriate, consider Spot Instances for operational tasks like data analysis, batch jobs and background processing.

Savings Plans

Compute Savings Plans offer attractive discounts that span EC2, Lambda and Fargate. They require an hourly commitment (in unit dollars) for a one-year or three-year term and can be applied to any instance type, size, tenancy, region and operating system.

It’s possible to save more than 60% using Compute Savings Plans, compared to on-demand pricing.

While the regional coverage is attractive, commitment terms are fixed with Savings Plans; they can’t be adjusted or decreased once made. This rigidity often leads to under- and over-commitment (and suboptimal savings).

EC2 Instance Savings Plans only offer savings for EC2 instances. They require a one-year or three-year commitment with the option to pay all, partial or no upfront. They are flexible enough to cover changes within an instance family, including alterations in operating system, tenancy and instance size. But these kinds of changes aren’t very common, and they can negatively affect the discount rate.

Overcommitment is common with EC2 Savings Plans. Standard RIs are viewed as a better option since they produce similar savings and are easier to acquire and offload on the RI marketplace.

Reserved Instances

Reserved Instances (RIs) provide a discounted hourly rate, either with an EC2 capacity reservation in a specific Availability Zone or scoped to a region. In either scenario, discounts are applied automatically for a quantity of instances:

- When EC2 instances match active RI attributes

- For AWS Availability Zones and instance sizes specific to certain regions

It’s possible to save more than 70% off of on-demand rates with Reserved Instances. They offer flexibility: instance families, operating system types and tenancies can be changed to help companies capitalize on discount opportunities. RIs don’t alter the infrastructure itself, so they are not a resource risk.

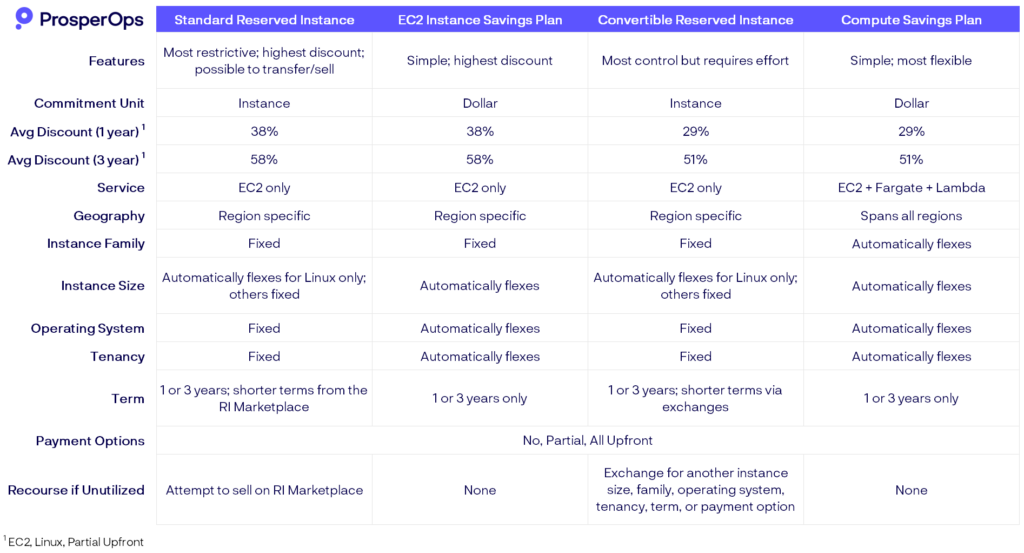

There are two different types of Reserved Instances: Standard RIs and Convertible RIs.

Standard RIs can provide more than 70% off of on-demand rates, but flexibility is restricted to liquidity in the RI marketplace and there are restrictions regarding when and how often they can be sold. They are best used for common instance types, in common regions, on common operating systems.

Convertible RIs can offer up to 66% off of on-demand rates, but their flexibility can actually generate greater savings than other discount instruments due to higher coverage potential. Convertible RIs offer:

- Flexibility. It’s possible to apply discounts to EC2 instances before rightsizing or executing another resource optimization.

- Greater savings. Even with three-year commitment terms, CRIs can be exchanged. So if EC2 usage changes, it is still possible to maintain EC2 coverage while maximizing Effective Savings Rate.

- Faster savings. Commitments can be made in aggregate for expected EC2 usage, instead of by specific instances that may have been forecasted. CRIs enable a more efficient “top-down” approach to capacity planning so that companies can realize greater savings faster without active RI management.

Enterprise Discount Program/Private Pricing Agreements/Promotional Credits

AWS Enterprise Discount Program (EDP) offers high discounts for commitments (starting at $500,000 per year) to spend a certain amount or use a certain amount of resources over a period of time. Generally, these agreements require a commitment term for higher discounts, typically ranging from two to seven years. They are best for organizations that currently have, or plan to have, a large footprint in the cloud.

It’s common for companies to double down and commit fairly aggressively for discounts using an EDP, but they may not realize how the agreement may limit their ability to make additional optimizations (using additional discount instruments, reducing waste or changing resources).

Overcommitting to high spend or usage before making optimizations is common, so it’s always recommended to negotiate EDPs based on a post-optimization environment. Discount tiers may be fixed, but support type, growth targets and commitment spanning years (instead of a blanket commitment) can—and should—be negotiated.

Part 3: Prioritize Cloud Rate Optimization for Faster and More Cloud Savings

More than 60% of AWS users’ cloud bills come from Compute spend, via resources in Elastic Compute Cloud (EC2), Lambda and/or Fargate. So it makes sense to prioritize optimization in compute—this is where you’ll realize savings most efficiently.

It’s possible to reduce compute costs by more than 40%, while reducing the overall AWS bill by 25%, using RIs and Savings Plans. The trick is to secure the appropriate level of commitment. Both over-committing and under-committing produce suboptimal savings.
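The two percentages are consistent with the compute share quoted above, as a short calculation shows. This is only a back-of-the-envelope sketch that assumes compute is exactly 60% of the bill and that the 40% reduction applies to all of it; the function name is illustrative.

```python
def overall_bill_reduction(compute_share, compute_reduction):
    """Reduction in the total bill when only the compute portion gets cheaper."""
    return compute_share * compute_reduction

# Assumed inputs: compute is 60% of the bill and its cost drops by 40%.
reduction = overall_bill_reduction(compute_share=0.60, compute_reduction=0.40)
print(f"Overall bill reduction: {reduction:.0%}")
```

Because both figures in the text are stated as "more than", slightly higher inputs land at roughly the 25% overall reduction cited.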

Commitment Management is Complex; Focus on Effective Savings Rate

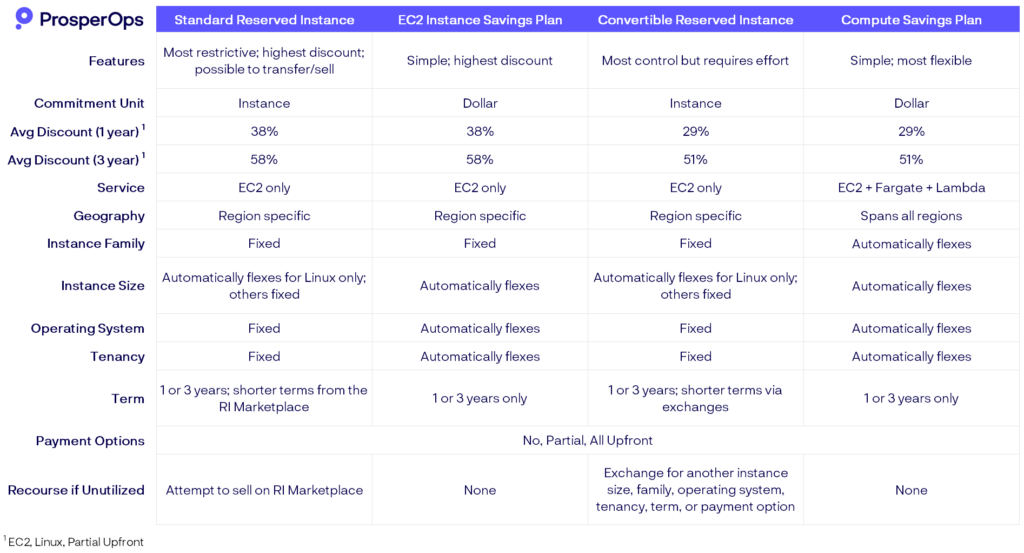

Even though discount instruments are designed to produce savings, companies need to choose the right instrument for workloads to fully realize those savings.

All discount instruments have benefits, tradeoffs and specific rules. This chart illustrates the elements.

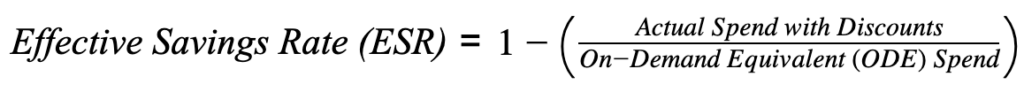

How do these elements relate to cloud savings? They can be easy to misinterpret. That’s why optimizing for Effective Savings Rate (ESR) is a recommended best practice. ESR simplifies rate optimization; it focuses FinOps teams on one metric that reveals the savings outcome.

ESR is the percentage of discount being received. It is calculated by dividing the amount saved through discounts (like RIs and Savings Plans) by the amount that would have been spent at on-demand pricing. Equivalently, it is one minus the ratio of actual discounted spend to on-demand equivalent spend.

Because utilization, coverage and discount rate are part of the calculation, it produces a consistent measure of savings performance and a reliable benchmarking metric.
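A minimal sketch of the calculation, treating ESR as one minus the ratio of actual spend to on-demand equivalent spend. The monthly figures are hypothetical and chosen to show how partial coverage and unused commitment pull ESR below the headline discount rate.

```python
def effective_savings_rate(actual_spend: float, on_demand_equivalent: float) -> float:
    """ESR = 1 - (actual spend / on-demand equivalent spend)."""
    if on_demand_equivalent <= 0:
        raise ValueError("on-demand equivalent spend must be positive")
    return 1 - actual_spend / on_demand_equivalent

# Hypothetical month: $100,000 of usage when priced at on-demand rates.
on_demand_equivalent = 100_000.0
covered_at_discount = 42_000.0   # discounted price paid for $60,000 of covered usage (30% off)
uncovered_on_demand = 40_000.0   # usage that received no discount
unused_commitment = 3_000.0      # commitment paid for but not applied to any usage

actual_spend = covered_at_discount + uncovered_on_demand + unused_commitment
esr = effective_savings_rate(actual_spend, on_demand_equivalent)
print(f"ESR: {esr:.1%}")
```

Here the discount rate on covered usage is 30%, but with 60% coverage and some unused commitment the overall ESR is about half of that, which is why a single outcome metric is easier to benchmark than the three inputs separately.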

Best Practice for Cost Optimization: Rate First, Then Resource

Because cloud cost optimization is complicated, it’s helpful to organize it in these buckets and track:

- How much you are spending by monitoring cloud spend per month

- Savings potential by tracking Effective Savings Rate

- Waste reduction and other resource optimization strategies, like re-architecting, tracking untagged or unknown spend, rightsizing, and removing unused or unattached resources

Optimize Rate and Resource at the Same Time with Autonomous Discount Management

Discount instruments contain many moving parts and they are complex to manage manually.

Using automation in an autonomous approach, however, creates a more efficient rate optimization experience. It enables “hands-free” management of cloud discount instruments. With cloud rate optimization being managed in a way that produces incremental, consistent savings, engineering teams can focus on innovation and resource optimization synchronously.

Optimize Cloud Discount Rates and Engineering Resources at the Same Time

While prioritizing cloud cost optimization around discount rates is a way to jump-start cloud savings, it’s possible—and preferable—to optimize discount rates and engineering resources at the same time.

When discount rates are managed using algorithms, it creates intra-team efficiency for FinOps. Not only are the challenges of manually managing discount instruments mitigated, but engineering teams are also freed up to focus on strategic projects and resource optimization. It’s a scenario that produces maximized cloud savings.

Why is an Autonomous Approach Necessary to Optimize Discount Rates?

There are certain jobs that complex algorithms perform better than humans. Cloud cost management is one of them. More specifically, calculations performed by sophisticated algorithms enable a more efficient, accurate and responsive approach: the autonomous management of discount instruments.

The concept of saving money using AWS Savings Plans and Reserved Instances (RIs) appears simple. But it is challenging to manage and exchange these instruments in a way that provides coverage flexibility. Each instrument contains benefits, limitations and tradeoffs, along with inherent challenges due to infrastructure volatility, commitments and terms.

Automation in the form of algorithmic calculations handles these intricacies efficiently.

Infrastructure Volatility: a Wild Card

Resource usage is dynamic in the cloud, and that movement creates unpredictable patterns whether it manifests as:

- Increasing and decreasing usage

- Moving from EC2 instances to Spot

- Switching between instance families

- Converting from EC2 to Fargate

- Moving to various containers

These types of engineering optimizations create volatility in company infrastructure and are challenging to match at scale, particularly when rigid discount commitment terms are in place.

Discount Commitment Rules and Coverage Planning

Two things that might not be obvious about working with discount instruments: optimization efforts can be hindered by commitment rules and challenges in discount coverage planning.

Compute Savings Plans, for example, are applied in a specific order: first, to the resource that will receive the greatest discount in the account where Savings Plans are purchased.

The discount benefit can next float to other accounts within an organization, but Savings Plans are not transferable once deployed in an account. In order to maximize benefits to an organization and centralize discount management, it is a best practice to purchase Savings Plans in an account isolated from resource usage.
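The "greatest discount first" ordering can be sketched for a single hour as follows. This is a simplified illustration, not AWS's billing logic: it ignores the account-level ordering described above, and the resource names, costs and discount rates are hypothetical.

```python
def apply_commitment(usage, commitment):
    """Apply an hourly commitment to usage, highest discount first.

    usage      -- list of (name, on_demand_cost, discount) for one hour
    commitment -- hourly commitment in discounted dollars
    Returns (covered fraction per resource, unused commitment, on-demand charge).
    """
    remaining = commitment
    on_demand_charge = 0.0
    covered = {}
    for name, od_cost, discount in sorted(usage, key=lambda u: u[2], reverse=True):
        plan_cost = od_cost * (1 - discount)   # price of this usage at the plan rate
        if plan_cost > 0:
            fraction = max(0.0, min(1.0, remaining / plan_cost))
        else:
            fraction = 1.0
        remaining -= plan_cost * fraction
        on_demand_charge += od_cost * (1 - fraction)
        covered[name] = fraction
    return covered, max(remaining, 0.0), on_demand_charge

# Hypothetical hour of usage: (resource, on-demand cost, discount rate).
usage = [("fargate", 10.0, 0.20), ("ec2-m5", 10.0, 0.40), ("lambda", 5.0, 0.10)]
covered, unused, on_demand = apply_commitment(usage, commitment=10.0)
print(covered, unused, on_demand)
```

In this example the commitment fully covers the EC2 usage (the deepest discount), half of the Fargate usage, and none of the Lambda usage, which falls back to on-demand rates.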

Coverage commitment planning for Savings Plans, too, is tricky because commitments are made in post-discount dollars—an abstract concept. FinOps teams must quantify upcoming needs (using post-discount dollars) for resources that contain variable discounts. It’s comparable to estimating a gift card amount for products with varying discount rates that will be bought, exchanged or returned.
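To make the post-discount-dollar idea concrete, the sketch below converts expected on-demand usage into the hourly commitment needed to cover it. The services, hourly amounts and discount rates are hypothetical; real rates vary by instance type, region and term.

```python
def hourly_commitment(on_demand_by_service, discount_by_service):
    """Commitment (in post-discount dollars) needed to cover the given on-demand usage."""
    return sum(
        od_cost * (1 - discount_by_service[service])
        for service, od_cost in on_demand_by_service.items()
    )

# Hypothetical steady-state usage per hour, priced at on-demand rates.
on_demand = {"ec2": 50.0, "fargate": 20.0}
discounts = {"ec2": 0.40, "fargate": 0.20}

commitment = hourly_commitment(on_demand, discounts)
print(f"${commitment:.2f}/hour commitment covers ${sum(on_demand.values()):.2f}/hour of on-demand usage")
```

The same $70 of on-demand usage would need a different commitment if the mix shifted between services, which is what makes the estimate hard to get right by hand.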

Rigid Terms and Lock-In Risk

Companies can get locked into commitment terms that end up creating more risk than benefit.

AWS discount instruments, for example, are procured in 12-month or 36-month commitment terms. While Convertible RIs can be exchanged and Standard RIs can be resold on the RI Marketplace, Savings Plans are immutable. Once made, these terms cannot be modified. They must be maintained through the end of the term.

Most companies respond to these constraints by under-committing their coverage. In an effort to be conservative and avoid risk, they incur on-demand rates, which are much higher.

Manual Management of Discount Instruments Produces Suboptimal Outcomes

It’s nearly impossible to execute all of these moving parts in a timely manner without a technical assist.

Companies that try to manage discount instruments manually, or via a pure-play RI broker, generally wind up with:

- Discount mismanagement

- Missed savings opportunities

- Overcommitment

One media company, for example, was locked into one-year discount rates and did not seek the opportunity to secure more favorable three-year rates. Higher discounts were missed. Because discount changes were being performed manually (and therefore at a slower pace), the company paid for commitments that were unutilized. With suboptimal coverage and discounts, they paid higher prices for cloud services, missing out on $3 million in potential savings.

This dynamic is very common.

Automation, however, is only part of the solution.

Autonomous Discount Management: Algorithms Enable Hands-Free Optimization

Many automated tools simplify steps or entire process sequences. Most will provide recommendations or a list of actions that require human intervention to implement. While that has value, particularly in saving time, cloud rate optimization (or discount management) is achieved holistically, with automation that performs algorithmic calculations and uses real-time telemetry to:

- Recognize resource usage patterns and scale up and down to cover them

- Autonomously manage and deploy discounts using a blended portfolio of Savings Plans, Standard Reserved Instances and Convertible Reserved Instances, taking into account the benefits and risks of each instrument

- Optimize for savings performance using Effective Savings Rate (ESR) and not for coverage or utilization alone.

Autonomous Discount Management is a hands-free experience that enables synchronous rate and resource optimization. FinOps teams can let the autonomous solution act for them (optimizing cloud costs) while they pursue other strategic tasks; engineering teams can optimize resources at the same time.

How Drift Optimizes Rate and Resources Synchronously

Like most companies, Drift had established internal methods to understand AWS costs and optimization performance. Savings, however, were still elusive, because Drift lacked visibility into cost drivers, appropriate tools—and discount instruments were being managed manually.

Consequently, Drift was receiving a 27% discount with only 57% coverage of discountable resources.

With a tool that provides cost visibility and attribution from CloudZero and another tool executing autonomous discount management from ProsperOps, Drift was able to optimize rates and resources synchronously. Drift’s ESR nearly doubled and more than $2.9 million in savings has been returned to its cloud budget in only a few months.

Drift can now:

- Identify optimization opportunities and proactively respond to anomalies in real time

- Review reports in minutes, not hours, with data about costs, usage, coverage, discounts and overall cloud savings performance

- Understand ESR improvements over time (savings performance and ROI) and their drivers

- Continue to realize incremental savings

Engineering and finance teams also have more efficient communication and coordination. This is just one example of what is possible when rate and resource optimization is synchronized.

Synchronous Optimization Addresses Top FinOps Challenges

The right tools and FinOps-supportive culture are key to achieving synchronous rate and resource optimization, and results like Drift’s. It’s a “better together” approach that helps teams resolve key FinOps challenges depicted in The State of FinOps 2023 report, including:

- Empowering engineers to take action on optimization

- Getting to unit economics

- Organizational adoption of FinOps

- Reducing waste or unused resources

- Enabling automation

The approach produces greater visibility into costs across the organization, a fast way to reduce costs and create savings, more time for engineering projects and improved intra-team communication.

Start a More Efficient Cloud Cost Optimization Journey with ProsperOps

We believe cloud cost optimization can be best addressed by first implementing autonomous rate optimization and working with respected vendors for specific resource optimization support.

A free Savings Analysis is the first step in the cost optimization journey; it reveals savings potential, results being achieved with the current strategy and optimization opportunities. To chart a course for maximized, consistent, long-term cloud savings, register for your Savings Analysis today!