AWS dominates the cloud market with over 200 fully featured services across compute, storage, database, analytics, and more. Its scale, innovation pace, and global infrastructure make it the go-to platform for startups and enterprises alike.

But with great flexibility comes a serious downside: cost unpredictability.

And it’s not AWS’s fault or your team’s. It’s the shift from fixed, centralized procurement models to decentralized, on-demand infrastructure. Unlike traditional data centers, where resource provisioning followed rigorous approvals and long-term planning, AWS puts power in the hands of engineers. Spin up a fleet of EC2 instances in seconds? Easy. Forget to shut them down? Also easy.

This shift accelerates delivery, but it also leads to cloud waste, budget overruns, and mounting pressure from finance. The 2025 State of FinOps Report found that more than 40% of organizations still say workload optimization and waste reduction are their primary focus.

This is where AWS cost optimization comes in.

It’s not just about cutting AWS spend; it’s about gaining control. When done right, optimization shifts your organization from reacting to surprise bills at the end of the month to proactively planning, tracking, and aligning cloud spend with actual business goals. It gives engineering and finance teams shared visibility, replaces guesswork with data, and ensures every dollar spent in AWS is intentional.

In this guide, we’ll break down the basics of AWS cost optimization, practical strategies to optimize both usage and pricing, and the tools that help you stay ahead of the curve. Read on!

What Is AWS Cost Optimization?

AWS cost optimization is the practice of improving the efficiency of your cloud spend across services, environments, and teams. It’s about ensuring that every dollar spent in AWS drives measurable business value by reducing waste, right-sizing resources, improving purchasing decisions, and increasing financial accountability.

With hundreds of services, flexible pricing models, and decentralized teams deploying resources in real time, AWS environments can quickly become complex and costly. Cost optimization brings structure to that complexity, helping organizations avoid overspending, align costs with budgets, and make cloud a sustainable, scalable investment.

Why Is AWS Cost Optimization Needed?

The shift from traditional infrastructure to cloud computing has redefined how organizations spend on technology. In the past, hardware procurement followed a structured process — costs were forecasted, budgets were approved upfront, and ROI was evaluated before deployment. CTOs and CFOs worked together to plan capital expenditures months in advance.

But with the flexibility that cloud services brought, procurement became instant. Teams can provision resources in seconds, with little to no financial gatekeeping. This agility supports innovation, but it also introduces unpredictable, fast-moving spend. A few extra clicks in the console can lead to thousands of dollars in unexpected charges, without clear visibility or accountability.

This fundamental shift puts pressure on engineering, finance, and leadership teams to align in real time. AWS cost optimization is no longer optional; it’s essential for bringing structure to dynamic spending. It enables businesses to maintain control, forecast more accurately, and align costs with business goals without slowing down innovation. Optimization, done right, ensures that flexibility doesn’t come at the cost of financial chaos.

Benefits of AWS Cost Optimization

Here’s a look at the main benefits of AWS cost optimization:

Lower costs

Effective cost optimization reduces spend by identifying idle, underused, or misaligned resources. This helps organizations avoid unnecessary charges and redirect budget toward higher-impact initiatives.

Improved resource efficiency

Optimization efforts like rightsizing and workload scheduling ensure that compute, storage, and other AWS services are used more efficiently, helping to maintain performance without overspending.

More accurate budgeting and forecasting

With better visibility into usage trends and spending patterns, teams can forecast future cloud costs more reliably. This improves budget planning and reduces the risk of mid-cycle surprises.

Clear cost accountability

When resources are properly tagged and costs are allocated accurately, it becomes easier to assign spending to specific teams or projects. This supports internal chargebacks, encourages responsible cloud usage across departments, and makes it easier to evaluate the ROI of cloud investments by connecting spend directly to outcomes.

Stronger cross-functional alignment

Cost optimization brings finance, engineering, and operations into regular collaboration. Shared metrics and joint reviews improve decision-making and ensure cloud investments align with business goals.

Understanding AWS Pricing Models

A core pillar of AWS cost optimization is choosing the right pricing model. With more than 200 fully featured services and a pay-as-you-go model, AWS offers flexibility but also complexity. Without a clear understanding of how each pricing model works, it’s easy to misalign workloads and waste spend. That’s why understanding AWS pricing structures is foundational to optimizing cost and performance.

Here’s a breakdown of the primary AWS pricing models and when each makes sense:

On-Demand

On-Demand pricing is the most straightforward pricing option offered by AWS, and it’s also the most flexible. You pay only for the resources you use, with no long-term commitment and no upfront cost. This makes On-Demand pricing a great fit for unpredictable workloads.

But if your workloads are predictable, then On-Demand pricing can become unnecessarily expensive over time. Instead, look for pricing models that offer discounts for long-term, sustained usage patterns.

AWS Savings Plans

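AWS Savings Plans offer up to 72% off On-Demand pricing (EC2 Instance Savings Plans; Compute Savings Plans offer up to 66%) in exchange for committing to a consistent amount of usage (measured in $/hour) over a 1- or 3-year term. They require less management than Reserved Instances because the discount follows your usage as configurations change. Compute Savings Plans apply across instance family, size, OS, tenancy, and region, and also cover Fargate and Lambda. EC2 Instance Savings Plans are tied to one instance family in one region but still flex across size, OS, and tenancy.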

However, once a Savings Plan is purchased, it can’t be modified or canceled after the 7-day return window. You can add coverage by purchasing additional Savings Plans, but the original commitment stays in place until its term expires.
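To make the commitment trade-off concrete, here is a minimal Python sketch of how an hourly Savings Plans commitment is applied. It assumes a single flat discount rate. Real Savings Plans rates vary by instance family, region, term, and payment option. `hourly_cost` and `total_cost` are hypothetical helpers, not an AWS API.

```python
def hourly_cost(on_demand_usage: float, commitment: float, discount: float) -> float:
    """Billed cost for one hour under a Savings Plan (simplified flat-discount model).

    on_demand_usage: what the hour's usage would cost at On-Demand rates, in $
    commitment:      Savings Plan commitment in $/hour (billed at Savings Plan rates)
    discount:        flat Savings Plan discount vs On-Demand, e.g. 0.3 for 30%
    """
    if not 0 <= discount < 1:
        raise ValueError("discount must be in [0, 1)")
    discounted_usage = on_demand_usage * (1 - discount)
    if discounted_usage <= commitment:
        # All usage fits inside the commitment; the unused remainder is still billed.
        return commitment
    # The commitment covers part of the usage; the overflow is billed at On-Demand rates.
    covered_on_demand = commitment / (1 - discount)
    return commitment + (on_demand_usage - covered_on_demand)


def total_cost(hourly_usage: list[float], commitment: float, discount: float) -> float:
    """Sum hourly costs over a usage history (one On-Demand-equivalent $ value per hour)."""
    return sum(hourly_cost(u, commitment, discount) for u in hourly_usage)


usage = [10.0, 10.0, 10.0, 4.0]  # hypothetical On-Demand-equivalent $ per hour
for c in (0.0, 2.8, 7.0):
    print(c, round(total_cost(usage, c, 0.3), 2))
```

This toy history costs $34.00 with no commitment. A $7.00/hour commitment brings it down to $28.00, while a $2.80 commitment lands at $29.20. Run the same sweep over your own hourly usage to see where each failure mode starts to cost you: under-commitment leaves usage spilling over to On-Demand, and over-commitment means paying for commitment you don’t use.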

AWS Reserved Instances

AWS Reserved Instances are the oldest commitment-based discount mechanism offered by AWS. They offer the same level of savings as Savings Plans, up to 72% compared to On-Demand pricing. In exchange, you commit to specific instance attributes, such as instance type and region, for a one- or three-year term.

While traditionally seen as an instrument suited only for predictable workloads, ProsperOps views RIs differently. With the right automation in place, RIs can be actively managed to adapt to changing usage patterns, for example by exchanging Convertible RIs for new configurations as usage shifts. That makes them just as viable in dynamic environments. When paired with a strategic blend of commitment types, they continue to be a powerful tool for cost optimization.

AWS Spot Instances

Spot Instances let you access spare EC2 capacity at up to 90% off On-Demand prices. But capacity isn’t guaranteed. AWS can reclaim an instance with only a two-minute interruption notice, so Spot is ideal for fault-tolerant, interruptible workloads like batch processing or ML model training.
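On the instance itself, a pending interruption appears at the instance metadata path `latest/meta-data/spot/instance-action`. It is a small JSON document with an `action` and a UTC `time`. The sketch below only interprets that document. Fetching it (IMDSv2 token handling and the HTTP call) is left out, and `seconds_until_interruption` is a hypothetical helper name.

```python
import json
from datetime import datetime, timezone
from typing import Optional


def seconds_until_interruption(body: Optional[str], now: datetime) -> Optional[float]:
    """Seconds left before a Spot interruption, or None if no notice is pending.

    body: text returned by the metadata path spot/instance-action, or None if
          that path returned 404 (no interruption scheduled).
    now:  current time as a timezone-aware UTC datetime.
    """
    if body is None:
        return None
    notice = json.loads(body)  # e.g. {"action": "terminate", "time": "2017-09-18T08:22:00Z"}
    when = datetime.strptime(notice["time"], "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)
    # Clamp at zero in case the deadline has already passed.
    return max(0.0, (when - now).total_seconds())
```

A worker would poll the metadata path every few seconds. Once a notice appears, it stops taking new work and checkpoints. Alternatively, Amazon EventBridge emits an "EC2 Spot Instance Interruption Warning" event that you can route to automation running outside the instance.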

Choosing the right pricing model for each workload is what separates reactive cost control from proactive cost optimization. To go deeper into how each model works and how to evaluate them, check out our complete AWS pricing guide.

Native Tools for AWS Cost Optimization

AWS provides a range of built-in tools designed to help teams monitor, analyze, and reduce cloud spending. Here’s how each tool supports key cost optimization efforts:

AWS Cost Optimization Hub

The AWS Cost Optimization Hub consolidates cost-saving recommendations from across AWS services into a centralized view. It includes rightsizing, idle resource detection, and Reserved Instance or Savings Plan purchase suggestions, all tied to projected savings and prioritized by impact.

AWS Trusted Advisor

AWS Trusted Advisor inspects your AWS environment and provides recommendations across six categories: cost optimization, performance, security, fault tolerance, service limits, and operational excellence. The full set of checks requires a Business, Enterprise On-Ramp, or Enterprise Support plan. Cost optimization checks flag idle resources, rightsizing opportunities, and Reserved Instance suggestions, but savings are often hidden in other categories as well.

For example, performance checks might identify inefficient EBS configurations or overprovisioned instances that directly impact both cost and system efficiency. By reviewing all Trusted Advisor checks regularly, teams can identify opportunities to reduce spend, improve reliability, and strengthen security, all from a single dashboard. This is useful for teams looking to tighten control over infrastructure without manual effort.

To enhance its recommendations, you can opt into AWS Compute Optimizer, allowing Trusted Advisor to display deeper insights based on real usage patterns.

AWS Compute Optimizer

AWS Compute Optimizer uses machine learning to recommend optimal configurations for EC2 instances, EBS volumes, Amazon ECS services on Fargate, and Lambda functions based on historical usage.

It highlights overprovisioned or underutilized instances and provides concrete rightsizing suggestions, including whether to switch to newer generations or instance families for better price performance.

AWS Billing and Cost Management


AWS Billing and Cost Management is the central hub for tracking and managing cloud spend. It includes tools like Cost Explorer, Budgets, and Anomaly Detection to help organizations stay on top of their AWS costs.

Cost Explorer allows you to visualize and analyze your cost and usage data over time. You can break down spend by service, account, tag, or linked resource, and build custom reports to monitor trends or identify cost drivers. It also includes basic forecasting features and pre-configured views to help uncover savings opportunities.
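Cost Explorer data is also available programmatically through the `get_cost_and_usage` API. The sketch below groups unblended cost by service and follows `NextPageToken` pagination. The client is passed in (for example `boto3.client("ce")`) so the function can be exercised without AWS credentials, and `cost_by_service` is a hypothetical helper.

```python
from collections import defaultdict


def cost_by_service(ce, start: str, end: str) -> dict[str, float]:
    """Total unblended cost per AWS service between start (inclusive) and end (exclusive).

    ce: a Cost Explorer client, e.g. boto3.client("ce")
    start, end: dates as "YYYY-MM-DD"
    """
    totals: dict[str, float] = defaultdict(float)
    kwargs = {
        "TimePeriod": {"Start": start, "End": end},
        "Granularity": "MONTHLY",
        "Metrics": ["UnblendedCost"],
        "GroupBy": [{"Type": "DIMENSION", "Key": "SERVICE"}],
    }
    while True:
        resp = ce.get_cost_and_usage(**kwargs)
        for period in resp["ResultsByTime"]:
            for group in period["Groups"]:
                service = group["Keys"][0]
                totals[service] += float(group["Metrics"]["UnblendedCost"]["Amount"])
        token = resp.get("NextPageToken")
        if not token:
            return dict(totals)
        kwargs["NextPageToken"] = token  # request the next page of results
```

Keep in mind that the `End` date is exclusive. Cost Explorer API requests are also billed per request, so cache results rather than polling.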

In addition to visualization, the broader Billing and Cost Management suite supports budget enforcement, alerts, and anomaly detection, making it easier to detect unexpected spikes and evaluate the impact of optimization efforts. It’s a foundational toolset for maintaining visibility and accountability across your cloud environment.

AWS Budgets

AWS Budgets enables proactive cost control. You can set custom spend or usage thresholds and receive alerts via email or SNS when nearing or exceeding those limits. Budgets also support automated actions when costs breach defined rules. These include applying an IAM policy or service control policy that restricts provisioning, or stopping EC2 and RDS instances, which helps prevent runaway spend before it happens.

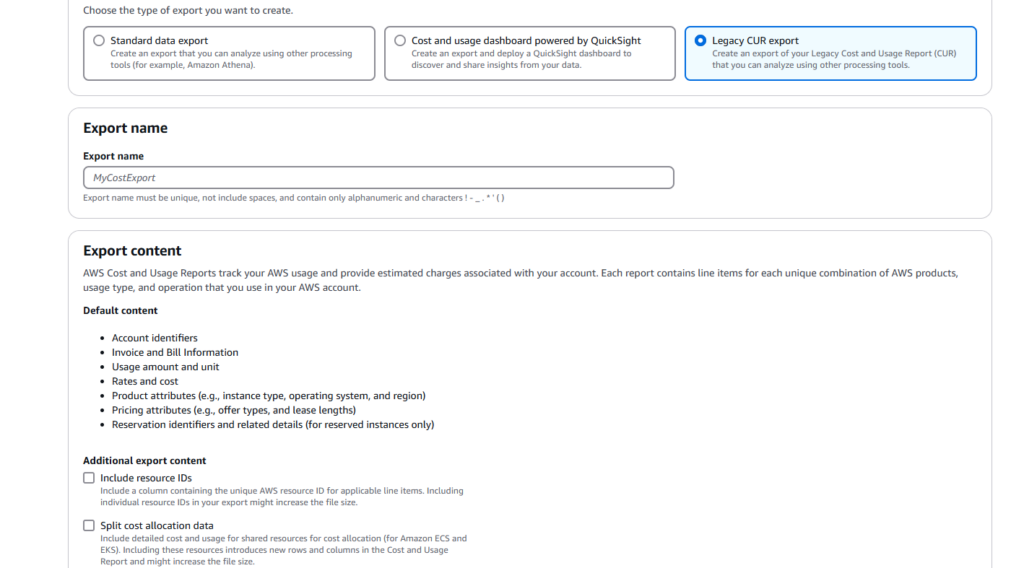

AWS Cost and Usage Report (CUR)

AWS Cost and Usage Report (CUR) delivers granular, line-item cost and usage data that can be exported to an S3 bucket for further analysis. It’s essential for teams that need detailed insights to build custom dashboards, track unit economics, or integrate with FinOps platforms.

Once in S3, CUR data can be analyzed with native AWS tools like Athena and QuickSight, enabling advanced querying, visualization, and reporting. As the most detailed source of billing data available in AWS, CUR serves as the foundation for deep cost transparency and customized analytics.

AWS Pricing Calculator

AWS Pricing Calculator acts as a pre-deployment planning tool that helps estimate the cost of AWS services based on your architecture. It’s useful for forecasting and modeling different infrastructure setups before provisioning anything.

AWS Cost Optimization Best Practices For 2025

AWS offers flexibility and scalability, but without a structured approach, costs can quickly spiral. Optimization isn’t just about cutting costs; it’s about making intentional, informed decisions that align spend with business value.

Below are proven strategies to help you reduce cloud waste, improve efficiency, and manage AWS costs proactively. These are not one-time fixes but ongoing practices that should be embedded into your operational workflows across engineering, finance, and leadership.

1. Leverage tagging, showback, and chargeback for cost allocation

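You can’t optimize what you can’t attribute. Cost allocation helps you track which teams, projects, or environments are driving cloud spend. Start by enforcing consistent tagging: every resource should include metadata like team, environment, and application. Use AWS Tag Policies to standardize tag keys and values. Use AWS Config (for example, the required-tags managed rule) to flag missing tags. Then activate those keys as cost allocation tags in the Billing and Cost Management console; tags that aren’t activated won’t show up in Cost Explorer or the CUR.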
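A missing-tag check is easy to script once you know where the tag data comes from. This sketch assumes input in the shape returned by the Resource Groups Tagging API (`get_resources` in boto3). The required keys mirror the examples in the text. Both the helper name and the key list are illustrative.

```python
# Keys taken from the examples in the text; replace with your own tag policy.
REQUIRED_TAGS = ("team", "environment", "application")


def missing_tags(tag_mappings: list[dict], required: tuple[str, ...] = REQUIRED_TAGS) -> dict[str, list[str]]:
    """Map each resource ARN to the required tag keys it lacks (or has with an empty value).

    tag_mappings: entries shaped like the Tagging API's ResourceTagMappingList,
    i.e. {"ResourceARN": ..., "Tags": [{"Key": ..., "Value": ...}]}.
    Tag keys are case-sensitive, so "Team" does not satisfy "team".
    """
    report = {}
    for mapping in tag_mappings:
        present = {t["Key"] for t in mapping.get("Tags", []) if t.get("Value", "").strip()}
        missing = [key for key in required if key not in present]
        if missing:
            report[mapping["ResourceARN"]] = missing
    return report
```

That API only returns resources that are or were tagged, so a never-tagged resource won’t appear in its output. Pair a script like this with the AWS Config rule for full coverage.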

Once tags are in place, use showback to report actual usage by team or business unit. Even if there’s no billing impact, visibility alone drives more responsible usage. For more mature organizations, move to chargeback by allocating actual costs back to teams and tracking them against budgets.

Tools like AWS Billing Conductor and the Cost and Usage Report can support both. Without allocation, cost visibility stays stuck at the account level. With it, teams gain ownership, and cost optimization becomes a shared responsibility, not just a finance task.
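For showback, the core operation is summing line-item cost by an ownership tag. The sketch below assumes the legacy CUR CSV column naming: `lineItem/UnblendedCost`, with user tags appearing as `resourceTags/user:<key>`. It also assumes a hypothetical `team` tag. CUR 2.0 exports use different column names, so adjust the constants.

```python
import csv
import io
from collections import defaultdict
from typing import Iterable

# Legacy CUR CSV column names (an assumption; CUR 2.0 / Data Exports differ).
TAG_COLUMN = "resourceTags/user:team"
COST_COLUMN = "lineItem/UnblendedCost"


def showback(rows: Iterable[dict], tag_column: str = TAG_COLUMN, cost_column: str = COST_COLUMN) -> dict[str, float]:
    """Total cost per tag value; untagged spend is kept visible under "untagged"."""
    totals: dict[str, float] = defaultdict(float)
    for row in rows:
        owner = (row.get(tag_column) or "").strip() or "untagged"
        totals[owner] += float(row.get(cost_column) or 0)
    return dict(totals)


sample_csv = io.StringIO(
    "lineItem/ProductCode,lineItem/UnblendedCost,resourceTags/user:team\n"
    "AmazonEC2,1.25,payments\n"
    "AmazonS3,0.75,payments\n"
    "AmazonRDS,2.50,\n"
)
print(showback(csv.DictReader(sample_csv)))  # {'payments': 2.0, 'untagged': 2.5}
```

An explicit `untagged` bucket makes allocation gaps visible instead of silently dropping them. For chargeback, you would typically switch from unblended to amortized cost so that commitment discounts are spread across the teams that benefit from them.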

2. Map workloads to the right pricing models

AWS offers a range of pricing models including On-Demand, Savings Plans, Reserved Instances (RIs), and Spot Instances, each suited to different workload behaviors and business needs. The key to cost efficiency is understanding these options and applying them strategically based on how workloads evolve over time.

Rather than defaulting to On-Demand or sticking to a single model, organizations should segment their workloads and align them to the most suitable pricing constructs. The most cost-effective way to purchase AWS resources depends on factors that keep changing. Cost-conscious companies therefore need to use a range of pricing models and continually revisit which ones they apply to each workload.

The key is workload segmentation. Classify workloads by volatility and duration. If you’re managing this manually, use Savings Plans for dynamic but consistent baseline workloads. Use Reserved Instances for locked-in, predictable services such as production databases.

It’s also good to mix some three-year commitments with one-year commitments, but track utilization regularly to avoid overcommitting. If your team lacks the bandwidth for this, automate it through a platform that dynamically adjusts coverage in near real time, like ProsperOps. This ensures you maximize your savings while minimizing risk and human intervention.
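Workload segmentation can start with a simple heuristic. The sketch below classifies one workload from its hourly usage using the rules described above. The coefficient-of-variation cutoff is an assumption for illustration, not AWS guidance. So is treating minimum usage as the committable baseline.

```python
from statistics import mean, pstdev


def suggest_pricing(usage: list[float], interruptible: bool = False, steady_cv: float = 0.1) -> dict:
    """Rough pricing-model suggestion for one workload.

    usage: hourly usage samples (e.g. instance-hours or On-Demand-equivalent $)
    interruptible: True if the workload tolerates being stopped at short notice
    steady_cv: coefficient-of-variation cutoff for "predictable" (hypothetical default)
    """
    if interruptible:
        return {"model": "Spot"}
    baseline = min(usage)  # the floor that is always in use
    if baseline == 0:
        return {"model": "On-Demand"}  # no guaranteed baseline to commit to
    cv = pstdev(usage) / mean(usage)
    if cv <= steady_cv:
        # Flat, predictable usage: a good fit for locked-in commitments.
        return {"model": "Reserved Instances", "commit": baseline}
    # Variable usage with a consistent floor: commit to the floor, pay On-Demand above it.
    return {"model": "Savings Plans for the baseline, On-Demand above it", "commit": baseline}
```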

3. Right-size continuously based on actual usage

Right-sizing is one of the most effective but underutilized AWS cost levers. Use AWS Compute Optimizer or CloudWatch metrics to monitor CPU, memory, and network usage across EC2, RDS, and EBS volumes. Note that EC2 memory metrics are only available once the CloudWatch agent is installed. Identify resources that consistently run below 40% utilization, or that spike only occasionally and can tolerate a smaller size.
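A first-pass rightsizing filter can be scripted against exported utilization data. This sketch assumes you already have CPU utilization samples (percent) per instance, for example from CloudWatch. It uses the 95th percentile so that rare spikes don’t disqualify an instance. The 40% threshold comes from the text; the percentile choice and the helper names are assumptions.

```python
import math


def p95(samples: list[float]) -> float:
    """95th percentile using the nearest-rank method."""
    ordered = sorted(samples)
    rank = math.ceil(0.95 * len(ordered))
    return ordered[rank - 1]


def rightsizing_candidates(cpu_by_instance: dict[str, list[float]], threshold: float = 40.0) -> list[str]:
    """Instance IDs whose p95 CPU utilization (%) stays below the threshold.

    Using p95 rather than the maximum tolerates rare spikes, which matches
    resources that "spike infrequently and can tolerate a downgrade".
    """
    return sorted(
        instance_id
        for instance_id, samples in cpu_by_instance.items()
        if samples and p95(samples) < threshold
    )
```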

Beyond EC2, review storage tiers and database configurations. Many RDS instances are sized for peaks they rarely hit or are left running during non-business hours. Schedule start/stop windows for dev and test environments using Lambda scripts or Instance Scheduler on AWS. A stopped RDS instance restarts automatically after seven days, so the schedule needs to stop it again.
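Here is a minimal sketch of the scheduled-stop approach as a Lambda function using boto3’s EC2 client. It assumes dev and test instances carry an `environment` tag with values `dev` or `test` (a hypothetical convention). The function’s role needs `ec2:DescribeInstances` and `ec2:StopInstances`. Pair it with a matching start function and a schedule, such as an EventBridge Scheduler cron expression.

```python
def stop_tagged_instances(ec2, tag_key: str = "environment", values: tuple[str, ...] = ("dev", "test")) -> list[str]:
    """Stop running EC2 instances whose tag_key matches one of values; return their IDs."""
    paginator = ec2.get_paginator("describe_instances")
    pages = paginator.paginate(
        Filters=[
            {"Name": f"tag:{tag_key}", "Values": list(values)},
            {"Name": "instance-state-name", "Values": ["running"]},
        ]
    )
    instance_ids = [
        instance["InstanceId"]
        for page in pages
        for reservation in page["Reservations"]
        for instance in reservation["Instances"]
    ]
    if instance_ids:
        ec2.stop_instances(InstanceIds=instance_ids)
    return instance_ids


def lambda_handler(event, context):
    """Entry point for a scheduled Lambda invocation."""
    import boto3  # bundled with the AWS Lambda Python runtime

    return {"stopped": stop_tagged_instances(boto3.client("ec2"))}
```

The EC2 client is injected into `stop_tagged_instances`, which keeps the core logic testable with a stand-in object and no AWS credentials.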

Right-sizing should not be a quarterly task — it should be built into every sprint or release cycle, with developers receiving cost feedback as part of their delivery pipeline.

4. Use auto scaling and load balancing to match demand

Auto scaling allows your environment to adapt in real time, scaling compute capacity up or down based on demand. This eliminates the need to provision for peak load at all times.

Start by identifying workloads with predictable usage curves, such as ecommerce traffic, scheduled jobs, and internal dashboards, and define policies that adjust instance count based on CPU or request metrics.

Pair Auto Scaling with Elastic Load Balancing (ELB) to distribute traffic across healthy instances. This combination ensures you’re not only avoiding overprovisioning but also increasing fault tolerance and performance.

For stateless applications, enable aggressive scale-in policies to avoid idle compute. Review scaling policies regularly to ensure they reflect actual usage trends and seasonal spikes.
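Target tracking is the simplest way to express the CPU-based policies described above. This sketch builds the keyword arguments for boto3’s `put_scaling_policy`. The group name and the 50% CPU target are placeholders to tune for your workload.

```python
def cpu_target_tracking_policy(asg_name: str, target_cpu: float = 50.0) -> dict:
    """Keyword arguments for autoscaling.put_scaling_policy (target tracking on average CPU)."""
    return {
        "AutoScalingGroupName": asg_name,
        "PolicyName": f"{asg_name}-cpu-target",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingConfiguration": {
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "ASGAverageCPUUtilization"
            },
            # Auto Scaling adds instances when average CPU runs above this value
            # and removes them when it runs below.
            "TargetValue": target_cpu,
            # Leave scale-in enabled so idle capacity is actually released.
            "DisableScaleIn": False,
        },
    }


params = cpu_target_tracking_policy("web-asg", target_cpu=50.0)
# boto3.client("autoscaling").put_scaling_policy(**params)
```

For request-driven services behind an Application Load Balancer, the `ALBRequestCountPerTarget` predefined metric is often a better signal than CPU. It also requires a `ResourceLabel` identifying the target group.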

5. Audit and act using AWS native tools

AWS cost optimization isn’t a one-and-done exercise. Instead, it’s an ongoing process with a constantly shifting goalpost. You can use AWS native tools like AWS Cost Explorer, AWS Budgets, and AWS Billing Conductor to regularly monitor your AWS environment and make adjustments as needed. This will allow you to continuously optimize for both cost savings and peak performance.

AWS native tools are a good starting place to monitor, manage, and forecast cloud costs. These tools form the operational baseline of AWS cost optimization and should be integrated into regular engineering and finance reviews.

6. Develop a culture of cost-consciousness

The biggest savings don’t always come from finance; they come from engineers who understand the cost impact of what they deploy. Implement tagging strategies to link spend to environments, teams, or projects. Make sure every resource is tagged at creation, and enforce tagging compliance using AWS Config or Service Control Policies.

You can instruct developers to prioritize cost-effectiveness, but creating a company culture that prioritizes cost-conscious development is a better long-term strategy.

Expose engineers to cost insights early:

- Share per-service and per-team dashboards during sprint reviews.

- Show the cost delta when they choose different instance families or storage types.

- Introduce cost KPIs into performance evaluations where relevant, such as cost per user, per deployment, or per transaction.

The goal is not to limit developer velocity, but to give them the data to make trade-offs. Cost-aware development is a cultural shift, and it starts with making cloud spending transparent and relevant to those who influence it the most.

But just as important: recognize the impact of good cost decisions. When a team right-sizes a workload, automates cleanup of idle resources, or avoids unnecessary spend through better architecture — call it out. Visibility and positive reinforcement are key to reinforcing cost-aware behavior and making engineers feel like partners in financial success, not just consumers of infrastructure.

7. Monitor service-level usage with purpose

Cost anomalies usually start small, until they show up as surprise charges on your bill. Prevent this by implementing detailed, service-level monitoring. Use Amazon CloudWatch to collect and visualize resource-level metrics like CPU, memory, disk I/O, and network traffic. Set custom alarms that trigger when usage deviates from expected patterns, especially for services that scale automatically.

Pair CloudWatch with AWS Cost Explorer or CUR data to link resource behavior to spend. For example, if Lambda execution time doubles overnight, does it reflect increased usage or a code issue? Cross-analyzing performance and billing data helps isolate inefficiencies.
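The Lambda question in the example can be answered with two CloudWatch metrics: the `Sum` of `Invocations` and the `Sum` of `Duration`. Lambda bills per request and per unit of duration scaled by configured memory. Both more calls and slower calls therefore raise cost, but they call for different fixes. The sketch below separates the two; the 20% tolerance and the helper name are assumptions.

```python
def diagnose_duration_change(before: dict, after: dict, tolerance: float = 0.2) -> list[str]:
    """Split a rise in total Lambda duration into more calls vs. slower calls.

    before/after: {"invocations": Sum of Invocations, "total_duration_ms": Sum of Duration}
    for two comparable periods (e.g. the same hour yesterday and today).
    """
    if before["invocations"] <= 0 or after["invocations"] <= 0 or before["total_duration_ms"] <= 0:
        raise ValueError("both periods need invocations and a nonzero baseline duration")
    invocation_ratio = after["invocations"] / before["invocations"]
    per_call_before = before["total_duration_ms"] / before["invocations"]
    per_call_after = after["total_duration_ms"] / after["invocations"]
    per_call_ratio = per_call_after / per_call_before
    findings = []
    if invocation_ratio > 1 + tolerance:
        findings.append("usage growth: more invocations")
    if per_call_ratio > 1 + tolerance:
        findings.append("efficiency regression: each invocation runs longer")
    return findings or ["no significant change"]
```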

Also, segment your data by environment (e.g., dev, staging, production) to identify environments that are over-allocated but underutilized. Reporting without action is useless; build these checks into operational reviews so teams can respond early.

8. Implement data lifecycle and storage class transitions

Storage costs are often overlooked because they’re less volatile than compute — but they add up over time. Start by auditing your S3 buckets, EBS snapshots, and backups. Identify data that hasn’t been accessed in 90, 180, or 365 days. Use Amazon S3 Storage Lens to understand object age, access frequency, and size distribution.

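Set lifecycle rules to transition infrequently accessed data from S3 Standard to S3 Standard-Infrequent Access (Standard-IA), S3 Glacier Instant or Flexible Retrieval, or S3 Glacier Deep Archive. These colder classes carry minimum storage durations and retrieval charges, so transition only data whose access pattern you’ve confirmed. Automate deletion of stale snapshots and redundant backups.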
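As an example, the lifecycle rule below moves objects under a hypothetical `logs/` prefix through progressively colder classes and then expires them. The day counts are illustrative. They were chosen so each stage respects the minimum storage durations: 30 days for Standard-IA, 90 for Glacier Flexible Retrieval, and 180 for Deep Archive. `GLACIER` is the API name for S3 Glacier Flexible Retrieval.

```python
def archive_lifecycle(prefix: str = "logs/") -> dict:
    """LifecycleConfiguration payload: Standard -> Standard-IA -> Glacier -> Deep Archive -> delete."""
    return {
        "Rules": [
            {
                "ID": "archive-then-expire",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    {"Days": 30, "StorageClass": "STANDARD_IA"},   # 60 days in IA (min 30)
                    {"Days": 90, "StorageClass": "GLACIER"},       # 90 days in Glacier (min 90)
                    {"Days": 180, "StorageClass": "DEEP_ARCHIVE"}, # 185 days in Deep Archive (min 180)
                ],
                "Expiration": {"Days": 365},
            }
        ]
    }
```

You would apply it with `boto3.client("s3").put_bucket_lifecycle_configuration(Bucket=..., LifecycleConfiguration=archive_lifecycle())`. That call replaces the bucket’s entire existing lifecycle configuration, so merge in any rules that are already in place.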

For EBS, regularly find and delete unattached volumes (snapshot any you may need later), and move logs that don’t need instant retrieval to cheaper storage. Establish clear data retention policies aligned with compliance needs and ensure teams follow them. Document when to archive, when to delete, and who approves exceptions.
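Finding unattached volumes takes a single filtered API call, because EBS volumes in the `available` state are not attached to any instance. This sketch only reports them and leaves deletion to a reviewed step. The EC2 client is injected (for example `boto3.client("ec2")`, which is scoped to one region), and the helper name is hypothetical.

```python
def unattached_volumes(ec2) -> list[dict]:
    """List EBS volumes in the "available" state, i.e. not attached to any instance."""
    paginator = ec2.get_paginator("describe_volumes")
    found = []
    for page in paginator.paginate(Filters=[{"Name": "status", "Values": ["available"]}]):
        for volume in page["Volumes"]:
            found.append({"VolumeId": volume["VolumeId"], "SizeGiB": volume["Size"]})
    return found
```

After review, calling `create_snapshot(VolumeId=...)` followed by `delete_volume(VolumeId=...)` preserves the data while stopping the volume charge.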

9. Use Budgets and Anomaly Detection to prevent runaway spend

Setting a budget after overspending is pointless. AWS Budgets allows you to define cost or usage thresholds before things go off track. Start by creating monthly budgets per account, team, or workload. Set up alerts to trigger at 50%, 80%, and 100% of budget thresholds, not just via email but through SNS or Slack integrations so they’re seen and acted on in real time.
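The 50/80/100% alert ladder maps directly onto the Budgets `create_budget` API. This sketch builds the request for boto3. The account ID, budget name, limit, and SNS topic ARN are placeholders. The alerts fire on actual spend (`ACTUAL`), though `FORECASTED` notifications are also available.

```python
def monthly_budget_request(account_id: str, name: str, limit_usd: float,
                           sns_topic_arn: str, thresholds=(50, 80, 100)) -> dict:
    """Keyword arguments for budgets.create_budget: a monthly cost budget with % alerts."""
    return {
        "AccountId": account_id,
        "Budget": {
            "BudgetName": name,
            "BudgetLimit": {"Amount": f"{limit_usd:.2f}", "Unit": "USD"},
            "TimeUnit": "MONTHLY",
            "BudgetType": "COST",
        },
        "NotificationsWithSubscribers": [
            {
                "Notification": {
                    "NotificationType": "ACTUAL",
                    "ComparisonOperator": "GREATER_THAN",
                    "Threshold": float(t),
                    "ThresholdType": "PERCENTAGE",
                },
                "Subscribers": [{"SubscriptionType": "SNS", "Address": sns_topic_arn}],
            }
            for t in thresholds
        ],
    }


# boto3.client("budgets").create_budget(**monthly_budget_request(
#     "111122223333", "team-payments-monthly", 5000, "arn:aws:sns:us-east-1:111122223333:budget-alerts"))
```

The SNS topic’s access policy must allow `budgets.amazonaws.com` to publish, or the notifications won’t be delivered.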

Complement this with AWS Cost Anomaly Detection, which uses machine learning to track unexpected spikes in spend across linked accounts, services, or usage types. Instead of combing through billing reports manually, anomalies are surfaced automatically, giving you early signals that something is off. For example, a misconfigured resource in a dev account triggering 10x normal usage can be flagged within hours, not days.

Together, Budgets and Anomaly Detection shift your cost management posture from reactive to proactive. They enable real-time guardrails around usage, especially important in decentralized teams where engineers can provision resources independently. Make reviewing these alerts part of your team’s weekly standups or sprint health checks to maintain accountability and follow-through.

10. Modernize instance and VM families regularly

Legacy instance types not only cost more per vCPU but also lack the performance and networking improvements of newer generations. For example, moving from M4 to M6i, or from C4 to C7g, can reduce both cost and latency. C7g runs on Arm-based Graviton processors, so your software must support arm64. AWS frequently introduces new instance families optimized for performance and efficiency, and adopting them is one of the most direct ways to improve price performance.

Set a regular cadence (e.g., every six months) to evaluate whether your workloads are running on the latest supported families. Sticking with outdated types like C3 or M3 can limit your cost-saving options, as AWS may no longer offer Reserved Instances or Savings Plans for them, leaving you with On-Demand pricing by default.

Use Compute Optimizer to identify instances that can be upgraded for better performance at lower cost. Don’t forget to check compatibility for licenses, security tools, and third-party agents when modernizing infrastructure. Treat modernization as an optimization lever — not just a migration task.
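Before running Compute Optimizer, a quick inventory pass can show how much of the fleet sits on older generations. This sketch parses instance type names such as `m4.large` into series and generation. The cutoff of generation 5 is an arbitrary example. Generation is only a rough proxy, so confirm actual savings with Compute Optimizer or current pricing.

```python
import re

# series letters, generation digits, optional attribute letters, then ".size"
_INSTANCE_TYPE = re.compile(r"^(?P<series>[a-z]+)(?P<gen>\d+)(?P<attrs>[a-z-]*)\.(?P<size>[a-z0-9-]+)$")


def older_generation(instance_types: list[str], min_generation: int = 5) -> list[str]:
    """Instance types whose generation number is below min_generation.

    Names that don't fit the common pattern (e.g. "u-6tb1.metal") are skipped.
    """
    flagged = []
    for itype in instance_types:
        match = _INSTANCE_TYPE.match(itype)
        if match and int(match.group("gen")) < min_generation:
            flagged.append(itype)
    return flagged
```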

11. Prioritize cost optimization as a core engineering metric

Cost shouldn’t be just a finance problem. It should be treated as a shared operational metric across engineering, DevOps, and leadership. Introduce unit economics, such as cost per user, per project, or per department, to make spend tangible at the team level. Tie these to OKRs where appropriate.

Adopt a FinOps framework to formalize this alignment. Share monthly cost reviews across technical and business stakeholders. Don’t just report on total spend; report on change. What caused the spike last sprint? What architectural decision avoided $10K this quarter?

Build feedback loops. Include cost in postmortems. Tie architectural design decisions to projected spend. When cost trade-offs are discussed early in planning (not after deployment), engineering teams can move fast without overspending.

To accomplish this, take the time to educate and engage your team on AWS cost optimization. Establish clear cost-efficiency goals and communicate them across the organization. Then check in regularly to track progress toward those goals, provide feedback, and celebrate teams that reduce waste, improve coverage, or make better architectural decisions. Visibility and recognition reinforce cost-aware behavior across the organization.

12. Measure, iterate, and re-optimize constantly

Cost optimization is not a one-time project; it’s an operational practice. Usage patterns shift, services evolve, and teams change how they work. What was efficient last quarter may now be a source of waste.

Set up a cadence for reviewing cost metrics and optimization impact. Revisit rightsizing, instance selections, and commitment strategies at least quarterly. But don’t stop there. Re-evaluate how you’re tracking and presenting this data. Adjust dashboards, tagging strategies, and reporting views to ensure they reflect current priorities and organizational structures.

Use tagging and CUR data to track changes at the workload or team level. Benchmark your current Effective Savings Rate and track improvements over time.
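Effective Savings Rate expresses your realized savings as a share of what the same usage would have cost at On-Demand rates. Here is a minimal sketch. It assumes both figures cover the same period and that the actual cost includes all commitment charges, including unused commitment. The function name is illustrative.

```python
def effective_savings_rate(on_demand_equivalent: float, actual_cost: float) -> float:
    """ESR = (On-Demand-equivalent cost - actual cost) / On-Demand-equivalent cost.

    on_demand_equivalent: what the period's usage would have cost at On-Demand rates
    actual_cost: what you actually paid, including all commitment charges
    """
    if on_demand_equivalent <= 0:
        raise ValueError("on_demand_equivalent must be positive")
    return (on_demand_equivalent - actual_cost) / on_demand_equivalent


print(f"{effective_savings_rate(100_000, 72_000):.0%}")  # 28%
```

Tracking this ratio month over month, rather than raw savings, keeps the benchmark comparable as total usage grows or shrinks.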

Establish a clear owner or FinOps lead responsible for driving these reviews. Build a roadmap of optimization goals with measurable KPIs. The organizations that succeed in AWS cost optimization treat it as an ongoing discipline, not a cleanup effort when budgets get tight.

13. Set clear KPIs for cost optimization

Without metrics, cost optimization efforts become subjective and hard to measure. Establishing clear, quantifiable KPIs ensures teams stay focused on impact—not just effort. These KPIs should reflect both financial outcomes and operational behaviors.

Start with high-level metrics like Effective Savings Rate (ESR), cost per workload, etc. Then break them down further into unit economics such as cost per deployment, cost per API call, or cost per customer served. Tag workloads accordingly to support accurate tracking through AWS Cost Explorer or CUR-based reporting.

These KPIs should be reviewed monthly and tied to team-level goals, not just centralized finance dashboards. When optimization is measured and tracked consistently, it becomes a habit, not a side project.

14. Educate and motivate teams around cost ownership

Cost efficiency doesn’t happen in isolation; it requires buy-in across engineering, finance, product, and leadership. But pushing ownership down to teams only works when people understand what’s expected and why it matters.

Start by educating teams on how AWS pricing works, how specific decisions (like overprovisioning or idle resources) directly impact cost, and what tools they can use to monitor their environments. Incorporate cost training into engineering onboarding. Share example cost wins in internal updates.

Motivation follows when teams are recognized for impact. Highlight cost optimizations during sprint demos. Tie meaningful savings to broader product outcomes or customer wins. Make cost awareness a visible, shared success metric, not a burden.

15. Use rate and usage optimization together

There’s a common misconception in cloud cost management: that you need to finish usage optimization before you start optimizing your rates. But when workloads shift by the hour or even the minute and services evolve constantly, there is no “done.” Waiting for perfect usage alignment before committing to Savings Plans or Reserved Instances just delays savings and leaves money on the table.

Skipping rate optimization while you endlessly fine-tune usage means you continue paying full price for infrastructure that could be discounted. On the flip side, committing to long-term pricing without addressing major inefficiencies means you’re locking in waste.

The right approach isn’t sequential; it’s parallel. Both levers need to work together. Treat them as an integrated workflow, not two isolated phases. This is the only way to drive sustainable, long-term savings.
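A back-of-the-envelope model shows what the sequential approach costs. This sketch makes three simplifying assumptions. The discount is flat. The usage optimization lands fully at the end of the waiting period. The committed floor never exceeds optimized usage, so no commitment is wasted. All parameter names are hypothetical, and spend is in On-Demand-equivalent dollars per month.

```python
def compare_commit_timing(monthly_spend: float, optimized_spend: float, floor: float,
                          discount: float, wait_months: int, horizon_months: int) -> dict:
    """Compare "optimize usage first, then commit" with "commit to a floor now".

    monthly_spend:   current On-Demand-equivalent spend per month
    optimized_spend: spend per month once usage optimization is finished
    floor:           conservative commitment made immediately (<= optimized_spend)
    """
    if not 0 <= floor <= optimized_spend <= monthly_spend:
        raise ValueError("expects 0 <= floor <= optimized_spend <= monthly_spend")
    # After the waiting period both strategies commit to all optimized usage.
    after = (horizon_months - wait_months) * optimized_spend * (1 - discount)
    # Sequential: pay full On-Demand rates until usage work is "done".
    sequential = wait_months * monthly_spend + after
    # Parallel: the floor is discounted from day one while optimization continues.
    parallel = wait_months * (floor * (1 - discount) + (monthly_spend - floor)) + after
    return {"sequential": sequential, "parallel": parallel, "difference": sequential - parallel}


print(compare_commit_timing(100, 80, 70, 0.3, wait_months=3, horizon_months=12))
```

Under these assumptions the difference simplifies to `wait_months × floor × discount`. Every month spent waiting forfeits the discount on usage you already knew you would keep.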

16. Make cost automation a default, not an afterthought

Manual cloud cost management doesn’t scale. As environments grow, teams can’t keep up with tracking idle resources, rightsizing instances, managing discount instruments, or enforcing usage policies by hand. What starts as good intentions often turns into missed savings, inefficient provisioning, and delayed action.

Automation eliminates this lag. It ensures cost optimizations happen continuously, not when someone gets around to it. For example, automated scripts can shut down non-production environments after business hours, enforce tagging compliance, or scale workloads based on real-time demand.

AWS Budgets and Anomaly Detection can trigger alerts and actions before spend gets out of control. Lifecycle policies for storage, Auto Scaling for compute, and scheduled reporting workflows reduce manual oversight.

Most importantly, automation enables real-time cost control. When commitments are automatically adjusted, idle resources are reclaimed without delay, and spend alerts are surfaced instantly, your team shifts from reactive clean-up to proactive cost discipline.

This isn’t a “nice to have”; it’s essential for any organization running at scale in AWS. Without it, optimization becomes inconsistent, delayed, and heavily dependent on individual bandwidth. With it, optimization becomes systemic.

You can explore the nuances of automation in AWS cost optimization with ProsperOps’ recent blog on FinOps automation.

Top 3 AWS Cost Optimization Tools You Need To Know

Managing AWS spend at scale requires more than just alerts and dashboards. These three tools help you move from reactive cost control to proactive optimization. Each of these tools offers unique capabilities to help you save smarter and operate with more financial precision.

1. ProsperOps

ProsperOps is an automated FinOps platform that maximizes cloud cost savings by dynamically blending discount instruments like AWS Reserved Instances and Savings Plans, and Google Cloud committed use discounts (CUDs). Using advanced machine learning and analytics, ProsperOps continuously analyzes cloud usage and optimizes commitment coverage to achieve an Effective Savings Rate (ESR) of 40% or more.

Unlike manual cost management efforts, which require ongoing monitoring and adjustments, ProsperOps offers a hands-free, risk-free approach to AWS cost optimization. It reduces financial lock-in risks, ensures discount instruments are always optimized, and eliminates operational friction, allowing businesses to save without changing their infrastructure or workflows.

Key features

- Autonomous discount management that continuously optimizes discount instruments in near real-time

- Offers commitment management for all three major clouds: AWS, Google Cloud, and Azure

- Frictionless setup with no manual interventions or infrastructure changes required

- Zero-risk pricing model, where you only pay a percentage of the savings generated

2. CloudZero

CloudZero turns raw AWS billing data into business-relevant insights, helping teams understand the cost of building and running software. It’s best suited for breaking down AWS costs into business-relevant metrics that improve alignment and accountability.

By aligning cloud spend with engineering decisions, it makes cost a shared responsibility. With detailed unit cost metrics and real-time alerts, CloudZero gives finance and product teams the visibility needed to make informed trade-offs.

Key features

- Customizable cost allocation and tagging insight

- Real-time, AI-powered anomaly detection and alerting for unusual spending patterns

- Integrates with ProsperOps for a complete optimization workflow

3. Vantage

Vantage is a comprehensive cloud cost management platform that provides organizations with detailed visibility into their AWS expenditures. Beyond cost tracking, Vantage offers automated cost recommendations, leveraging real-time analysis to identify potential savings opportunities. Its user-friendly interface supports features like anomaly detection and Slack alerts, facilitating prompt responses to unexpected spending changes.

Key features

- Detailed usage breakdowns by account and service

- Daily forecasts and historical trend analysis

- Slack integration for real-time cost alerts

- Supports cost monitoring across multiple clouds

- Customized recommendations for cost-saving opportunities

Automatically Optimize Your AWS Costs With ProsperOps

Managing AWS costs manually is complex, time-consuming, and prone to inefficiencies. While AWS provides native cost management tools, they often require constant monitoring, manual intervention, and deep expertise to extract maximum savings. Without automation, businesses risk missing optimization opportunities, reacting too late to cost spikes, or failing to fully utilize AWS discount programs.

This is where ProsperOps comes in.

Using our autonomous discount management platform, we optimize the hyperscaler’s native discount instruments to reduce your cloud spend and place you in the 98th percentile of FinOps teams.

This hands-free approach to AWS cost optimization can save your team valuable time while ensuring automation continually optimizes your AWS discounts for maximum Effective Savings Rate (ESR).

Make the most of your AWS cloud spend with ProsperOps. Schedule your free demo today!